Nowadays, it is rare to find a phone that does not support portrait mode or augmented reality effects. Each device has different hardware, but the development platforms made available to developers make the work easier. Google, in a new publication, has officially announced a new tool for these processes.

The company says that real-time iris tracking is one of the keys to improving computational photography and augmented reality. This makes sense: if the phone can estimate the position of the iris in real time, it becomes easier for it to estimate the position of the other elements in the scene.

MediaPipe Iris: Google’s technology to improve our phones' cameras

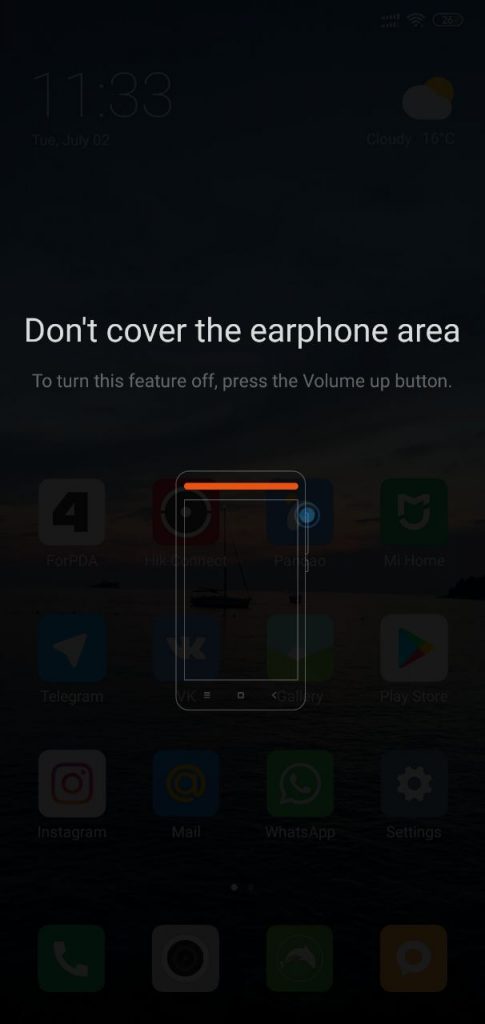

Google says that tracking the iris in real time is a difficult task for mobile phones because of their limited resources, variable lighting conditions and the presence of occlusions, such as squinting eyes or hair and other objects covering the eyes. To address this, it has launched MediaPipe Iris, a new machine learning model designed to estimate the iris more accurately.

Google's new model opens many doors by working with a single RGB sensor, with no need for specialized mobile hardware

This model, according to the company, can track landmarks covering the iris, the pupil and the contour of the eye itself. The best part is that the model works with a single RGB camera, so it does not need specialized hardware such as 3D sensors or dual cameras. Any phone with a selfie camera could therefore use the model. Google promises that with this model the subject-to-camera distance can be determined with a relative error of less than 10%, a pretty good level of precision.

The iris depth estimation works from a single image, using the camera’s own focal length. This is especially useful because it lets the phone estimate how far away we are from it, which opens many doors for both accessibility and augmented reality.
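As a rough illustration of how a distance can come out of a single image and a focal length, here is a minimal sketch that assumes a simple pinhole camera model and a roughly constant iris diameter of about 11.7 mm (a figure Google cites for MediaPipe Iris, not stated in this article). The function name, its parameters and the sample values are hypothetical, and this is not Google's actual implementation.

```python
# Average horizontal diameter of the human iris, in millimetres.
# Assumption: taken from Google's description of MediaPipe Iris, not from this article.
IRIS_DIAMETER_MM = 11.7


def estimate_distance_mm(
    focal_length_px: float,
    iris_diameter_px: float,
    iris_diameter_mm: float = IRIS_DIAMETER_MM,
) -> float:
    """Estimate subject-to-camera distance with the pinhole camera model.

    By similar triangles: distance / real_size = focal_length / size_in_image.
    The focal length and the iris size in the image are both given in pixels.
    """
    if focal_length_px <= 0 or iris_diameter_px <= 0:
        raise ValueError("focal length and iris diameter must be positive")
    return focal_length_px * iris_diameter_mm / iris_diameter_px


# Hypothetical values: a 1000 px focal length and an iris that spans 23.4 px.
distance = estimate_distance_mm(focal_length_px=1000.0, iris_diameter_px=23.4)
print(f"Estimated distance: {distance / 10:.1f} cm")
```

With these made-up numbers the estimate comes out at about half a meter. In practice, the iris width in pixels would come from the tracked iris landmarks, and the focal length from the camera's metadata or calibration.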

To check the tool's effectiveness, Google compared it with the depth sensor of the iPhone 11. According to the company, the Apple sensor's margin of error is below 2% for distances under 2 meters, while Google's model has an average relative error of 4.3%. That error is quite low for a method that needs no specialized hardware, and it is enough to obtain reliable data on metric depth and iris position.
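For readers unfamiliar with the metric, the sketch below shows how an average relative error like the one quoted could be computed by comparing estimates against a reference sensor. The function names and the sample measurements are hypothetical and purely illustrative; they are not Google's data.

```python
def relative_error(estimated: float, reference: float) -> float:
    """Relative error of an estimate with respect to a reference measurement."""
    if reference == 0:
        raise ValueError("reference must be non-zero")
    return abs(estimated - reference) / abs(reference)


def mean_relative_error(pairs: list[tuple[float, float]]) -> float:
    """Average relative error over (estimated, reference) pairs."""
    if not pairs:
        raise ValueError("at least one measurement pair is required")
    return sum(relative_error(est, ref) for est, ref in pairs) / len(pairs)


# Hypothetical measurements in millimetres: (model estimate, depth-sensor reading).
samples = [(520.0, 500.0), (970.0, 1000.0), (1560.0, 1500.0)]
print(f"Mean relative error: {mean_relative_error(samples):.1%}")
```

A relative error is scale-free, so a 4% error means about 2 cm at half a meter and about 8 cm at 2 meters.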

Via | Google